Today, AI and Natural Language Processing (NLP) are rapidly gaining significance, with no-code AI-driven platforms proving especially valuable. The NLP market is expected to grow nearly 14x by 2025, from approximately $3 billion to $43 billion.

Within this landscape, Custom LLMs have gained popularity for their ability to understand language and generate tailored solutions. Pre-trained models such as OpenAI's GPT-3.5 serve generic business needs well. However, every approach has trade-offs, and even the most capable LLMs can struggle with specific tasks, industries, or applications.

So how can you overcome these challenges?

Fine-tuning helps you address these needs in specific contexts. By customizing and refining an LLM, businesses can unlock its potential and achieve optimal performance in targeted scenarios.

In this blog, we discuss why customizing LLMs improves their performance, and we explore techniques and strategies for adapting these models to specific tasks and applications.


Fine-tuning lets you extract task-specific features from a pre-trained LLM. These features capture the intricacies of the task and can greatly improve model performance.

You can selectively fine-tune specific layers of the model, focusing on those that capture high-level, domain-specific information. This approach preserves the model's general understanding of language while refining it for the intended task.
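As a rough illustration, the sketch below decides which parameters to train when only the top transformer layers and the task head are unfrozen. It assumes BERT-style parameter names such as `encoder.layer.<i>.` and a `classifier.` head; those names and the helper `trainable_mask` are hypothetical. In PyTorch you would apply the result by setting `requires_grad` on each parameter.

```python
import re

def trainable_mask(param_names, num_layers, top_k, head_prefix="classifier."):
    """Return {name: bool}, where True means the parameter should be fine-tuned.

    Assumes hypothetical BERT-style names like 'encoder.layer.7.attention...'.
    Only the top `top_k` transformer layers and the task head are trainable;
    embeddings and lower layers stay frozen to preserve general language knowledge.
    """
    first_trainable = num_layers - top_k
    layer_re = re.compile(r"encoder\.layer\.(\d+)\.")
    mask = {}
    for name in param_names:
        if name.startswith(head_prefix):
            mask[name] = True  # the task head is always trained
            continue
        match = layer_re.search(name)
        # Parameters outside the numbered layers (e.g. embeddings) stay frozen.
        mask[name] = bool(match) and int(match.group(1)) >= first_trainable
    return mask

names = [
    "embeddings.word_embeddings.weight",
    "encoder.layer.0.attention.self.query.weight",
    "encoder.layer.10.output.dense.weight",
    "encoder.layer.11.output.dense.weight",
    "classifier.weight",
]
mask = trainable_mask(names, num_layers=12, top_k=2)
# With PyTorch (assumption about your setup):
# for n, p in model.named_parameters(): p.requires_grad = mask.get(n, False)
```

Raising `top_k` trains more of the network, which is one way to match the depth of fine-tuning to task complexity.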

Fine-tuning also offers the flexibility to adjust learning rates, striking a balance between preserving the broad patterns acquired during pre-training and learning the details needed for task-specific performance.
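One common way to strike this balance is layer-wise learning-rate decay: the top layer gets the base learning rate and each lower layer gets a geometrically smaller one, so the early layers holding general patterns change least. The sketch below only computes the rates; the `base_lr` and `decay` values are illustrative assumptions. In PyTorch, each rate could go into a parameter group such as `{"params": layer.parameters(), "lr": lr}`.

```python
def layerwise_lrs(num_layers, base_lr=2e-5, decay=0.9):
    """Learning rate per transformer layer, where index 0 is the lowest layer.

    The top layer gets base_lr; each layer below is scaled by `decay` once more,
    so lr(i) = base_lr * decay ** (num_layers - 1 - i).
    """
    return [base_lr * decay ** (num_layers - 1 - i) for i in range(num_layers)]

lrs = layerwise_lrs(num_layers=4, base_lr=1e-4, decay=0.5)
for i, lr in enumerate(lrs):
    print(f"layer {i}: lr={lr:.2e}")
```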

Fine-tuning also lets you experiment with different batch sizes and numbers of epochs, tailoring the training process to the characteristics of the new data.
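Because the number of optimizer steps (and therefore any warmup or decay schedule) depends on both batch size and epoch count, it can help to enumerate candidate settings before launching runs. The helper below is a hypothetical sketch; the dataset size and candidate values are placeholders, and `grad_accum` assumes you may use gradient accumulation.

```python
import itertools
import math

def training_plan(num_examples, batch_sizes, epoch_options, grad_accum=1):
    """List candidate (batch_size, epochs, total_steps) configurations to compare.

    Optimizer steps per epoch = ceil(num_examples / (batch_size * grad_accum)).
    """
    plan = []
    for batch_size, epochs in itertools.product(batch_sizes, epoch_options):
        steps_per_epoch = math.ceil(num_examples / (batch_size * grad_accum))
        plan.append({"batch_size": batch_size, "epochs": epochs,
                     "total_steps": steps_per_epoch * epochs})
    return plan

plan = training_plan(num_examples=5000, batch_sizes=[8, 16, 32], epoch_options=[2, 3])
for cfg in plan:
    print(cfg)
```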

Fine-tuning transfers knowledge from the pre-trained model to your specific domain of interest, which is especially valuable when only limited data is available for the target task.

Fine-tuning provides a valuable opportunity to address any inherent bias present in the pre-trained model. It enables the creation of a customized model that aligns with the particular requirements of the application.

Fine-tuning techniques can incorporate regularization, which protects against overfitting on small task-specific datasets. This helps the model retain a strong ability to generalize, improving performance and reliability.
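Weight decay is one widely used regularizer in fine-tuning. For plain SGD, adding an L2 penalty (λ/2)·‖w‖² to the loss is equivalent to shrinking each weight by lr·λ·w on every step. The minimal sketch below applies that update to a list of floats as a toy stand-in for real tensors; the `lr` and `weight_decay` values are illustrative.

```python
def sgd_step_with_weight_decay(weights, grads, lr=0.1, weight_decay=0.01):
    """One plain-SGD update with weight decay (equivalent to an L2 penalty here).

    Each weight is shrunk toward zero by lr * weight_decay * w and then moved
    against its task-loss gradient. The shrinkage discourages large weights
    that merely memorize a small task-specific dataset.
    """
    return [w - lr * weight_decay * w - lr * g for w, g in zip(weights, grads)]

# With zero task gradient, the weights simply shrink by a factor of (1 - lr * weight_decay).
new_weights = sgd_step_with_weight_decay([1.0, -2.0], [0.0, 0.0], lr=0.1, weight_decay=0.5)
print(new_weights)
```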

Experts can fine-tune the model iteratively, observing performance on validation data and making changes as needed. Such controlled adaptation is important for achieving optimal results.

Customizing LLMs for specific tasks is a systematic process that involves domain expertise, data preparation, and model adaptation. The whole journey, from choosing the right pre-trained model to fine-tuning it for optimal performance, requires careful consideration and attention to detail. To simplify this for you, we have provided a step-by-step guide to the process.

When you begin with a specific task, it is important to clearly define the objective and desired goals. Identify your key requirements so that the results align with your expectations.

To address your task effectively, consider the nature of the data at hand. Understand its characteristics, such as size, complexity, and relevance to the application. With a well-defined objective and a clear picture of the data, you are ready to tailor your approach to the LLM at hand.
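A quick profile of the data makes these characteristics concrete. The sketch below assumes a labeled classification dataset stored as `(text, label)` tuples, which is an assumption for illustration; word counts are only a rough proxy for the model's actual token counts.

```python
from collections import Counter
from statistics import mean, median

def profile_dataset(examples):
    """Summarize size, label balance, and text length for (text, label) pairs."""
    lengths = [len(text.split()) for text, _ in examples]  # whitespace words, not model tokens
    labels = Counter(label for _, label in examples)
    return {
        "num_examples": len(examples),
        "label_counts": dict(labels),
        "mean_words": mean(lengths),
        "median_words": median(lengths),
        "max_words": max(lengths),
    }

sample = [
    ("Refund has not arrived after two weeks", "billing"),
    ("App crashes on login", "bug"),
    ("How do I change my plan?", "billing"),
]
print(profile_dataset(sample))
```

A heavily skewed `label_counts` or a long tail in `max_words` is an early signal to rebalance data or revisit the maximum sequence length.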

When considering pre-trained models for your task, evaluate them on their architecture, size, and relevance to the task at hand. Consider whether the model's structure aligns with your task's requirements, and check that its size fits your available resources. Also assess how the model performs on similar tasks, which indicates whether it already captures the relevant features.
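A back-of-the-envelope way to check size against available resources is the memory needed just to hold the weights: parameters × bytes per parameter. The sketch below covers weights only; activations, the KV cache, and (for full fine-tuning) gradients and optimizer states add substantially more, so treat the result as a lower bound. The 7-billion-parameter figure is an illustrative example.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gb(num_params, dtype="fp16"):
    """Approximate memory in GB (1 GB = 1e9 bytes) needed to store the model weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in ("fp32", "fp16", "int4"):
    print(dtype, weight_memory_gb(7e9, dtype), "GB")
```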

Collect datasets that cover the information and examples relevant to your task. This data will serve as the foundation for training and evaluating your selected model.

Once you have collected your data, the next crucial step is to clean and preprocess it. This involves ensuring consistency and compatibility with the chosen pre-trained model. Check for issues such as missing values, outliers, or anomalies in the dataset that may affect the quality of your results.

These steps help you create a standardized, reliable dataset that meets the requirements of both your task and the chosen model.
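The cleaning steps described above might look like the sketch below, assuming records are dicts with hypothetical `text` and `label` keys. The `max_words` cutoff for length outliers is a placeholder you would tune to your model's context length, and deduplication here is case-insensitive by design.

```python
def clean_examples(records, max_words=512):
    """Basic cleaning for a list of {"text": ..., "label": ...} dicts.

    - drops records with a missing or empty text or label
    - normalizes whitespace
    - drops length outliers longer than `max_words` whitespace-separated words
    - removes duplicates, compared case-insensitively after normalization
    """
    seen = set()
    cleaned = []
    for rec in records:
        text, label = rec.get("text"), rec.get("label")
        if not text or not label:
            continue  # missing values
        text = " ".join(text.split())
        if not text or len(text.split()) > max_words:
            continue  # empty after normalization, or a length outlier
        key = (text.lower(), label)
        if key in seen:
            continue  # duplicate
        seen.add(key)
        cleaned.append({"text": text, "label": label})
    return cleaned

raw = [
    {"text": "  Where is my   order? ", "label": "shipping"},
    {"text": "where is my order?", "label": "shipping"},
    {"text": None, "label": "billing"},
    {"text": "Card was charged twice", "label": ""},
    {"text": "Cancel my subscription", "label": "billing"},
]
print(clean_examples(raw))
```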

When working with a pre-trained model, it's important to customize the architecture to fit your specific task. This can mean changing the layers, the structure, or other aspects of the model to meet the task's requirements.

The output layer of a model generates predictions or classifies inputs into separate categories. To ensure relevant results, adjust the output layer based on two factors: the number of classes and the nature of the target variable.
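The sketch below shows how both factors shape the output layer. The head emits one logit per class, so its size equals the number of classes (in Hugging Face Transformers this is typically set with the `num_labels` argument of `AutoModelForSequenceClassification.from_pretrained`). The nature of the target then decides how logits become predictions: softmax for exactly one class, independent sigmoids for multi-label targets. The logits and the 0.5 threshold are illustrative assumptions.

```python
import math

def predict_single_label(logits):
    """Multi-class target (exactly one class applies): softmax, then argmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    return probs.index(max(probs)), probs

def predict_multi_label(logits, threshold=0.5):
    """Multi-label target (any number of classes apply): independent sigmoids plus a threshold."""
    probs = [1 / (1 + math.exp(-z)) for z in logits]
    return [i for i, p in enumerate(probs) if p >= threshold], probs

logits = [2.0, -1.0, 0.5]  # one logit per class, so this head has 3 outputs
print(predict_single_label(logits)[0])  # → 0
print(predict_multi_label(logits)[0])   # → [0, 2]
```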

Fine-tuning involves adjusting the pre-trained layers of the model to enhance its performance on your specific task. The complexity of your task largely determines how much fine-tuning is needed: simpler tasks may require only minor changes, while more complex tasks may require deeper adjustments or even retraining certain layers.

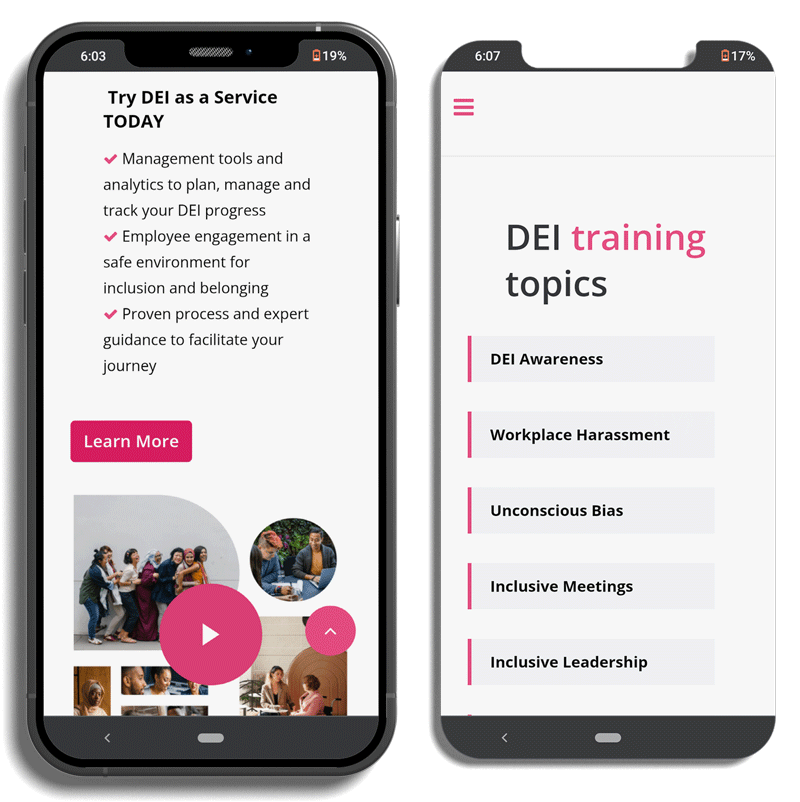

Delve into the journey of a leading DEI Operating System, as it overcomes the challenges within its internal features. Faced with an old user interface and inefficient functionality, the organization recognized the need for modernization to improve user experience and efficiency. Through strategic solutions and innovative enhancements, we navigated these challenges, reshaped office dynamics, and established a more inclusive workplace environment.

When working with Custom LLMs, starting from a pre-trained model gives you the general patterns and features it learned from its original training data. Building on this knowledge strengthens the model's understanding of your task.

Fine-tuning entails training the model on a task-specific dataset, refining its representations for your task. Monitoring its performance on a separate validation dataset during training is crucial: it shows how well the model generalizes to new data and helps you catch overfitting early. Frequent monitoring supports informed decisions about adjusting hyperparameters or stopping training.
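Early stopping turns this monitoring into a rule: stop once validation loss has not improved for a set number of evaluations, and keep the best checkpoint. The minimal sketch below is framework-agnostic; the validation losses are made-up numbers for illustration, not results. Libraries such as Hugging Face Transformers provide a similar `EarlyStoppingCallback`.

```python
class EarlyStopping:
    """Stop when validation loss hasn't improved by `min_delta` for `patience` evaluations."""

    def __init__(self, patience=2, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = None
        self.bad_evals = 0

    def step(self, val_loss, epoch):
        """Record one validation result; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch = val_loss, epoch
            self.bad_evals = 0  # improvement: reset the counter (save a checkpoint here)
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience

# Made-up validation losses from successive epochs of a hypothetical fine-tuning run.
val_losses = [0.92, 0.71, 0.65, 0.66, 0.67, 0.60]
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss, epoch):
        stopped_at = epoch
        print(f"stopping after epoch {epoch}; best epoch was {stopper.best_epoch}")
        break
```

Note that with `patience=2` the run stops before seeing the later improvement at epoch 5; choosing patience is itself a trade-off between compute and the risk of stopping too early.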

To evaluate the model, assess its performance on a held-out test set. This evaluation provides valuable insight into how well the model generalizes to unseen data and how it is likely to perform in real-world settings.

It is essential to analyze metrics relevant to the specific task at hand, such as accuracy, precision, recall, and others. These metrics offer an understanding of the model’s performance, guiding adjustments and refinements to enhance its effectiveness.
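For a classification task, these metrics follow directly from comparing test-set labels with predictions. The sketch below computes accuracy and, for one chosen positive class, precision, recall, and F1; the labels are toy data. scikit-learn's `precision_recall_fscore_support` computes the same quantities if you prefer a library.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy plus precision, recall, and F1 for one `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = ["spam", "spam", "ham", "ham", "spam", "ham"]
y_pred = ["spam", "ham", "ham", "spam", "spam", "ham"]
print(classification_metrics(y_true, y_pred, positive="spam"))
```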

Once you have fine-tuned and optimized the model, deploy it to the target environment where it will be used in real-world scenarios. This involves setting up the necessary infrastructure, such as servers or cloud platforms, to host the model and make it accessible to users or other systems.

Next, collect data on how the model performs: measure key metrics and analyze its behavior across different use cases. Feedback loops support continuous improvement of the deployed model by gathering input from users, stakeholders, or other sources about how well it meets their needs and expectations.
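One simple feedback loop tracks the share of positive user ratings over recent responses and flags the model for review when it drops. The sketch below assumes a hypothetical thumbs-up/thumbs-down signal; the window size and alert threshold are placeholders to tune for your traffic.

```python
from collections import deque

class FeedbackMonitor:
    """Track the share of positive user ratings over the most recent `window` responses."""

    def __init__(self, window=100, alert_below=0.8):
        self.recent = deque(maxlen=window)  # the oldest ratings fall off automatically
        self.alert_below = alert_below

    def record(self, helpful):
        self.recent.append(bool(helpful))

    def positive_rate(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def needs_attention(self):
        rate = self.positive_rate()
        return rate is not None and rate < self.alert_below

monitor = FeedbackMonitor(window=5, alert_below=0.6)
for rating in [True, True, False, True, False, False, False]:
    monitor.record(rating)
print(monitor.positive_rate(), monitor.needs_attention())
```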

To support effective collaboration and future maintenance of your Custom LLMs, document the entire process. The documentation should record the decisions made, the parameters used, and the outcomes observed at each stage.

Provide an overview of the project and the purpose of customizing the model. Describe the data used for training and fine-tuning the model. You can include details about the data sources, preprocessing steps, and any data augmentation techniques applied.
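Keeping this information in a structured, machine-readable record makes it easier to compare runs and hand projects over. The sketch below is one possible layout; the field names and example values are illustrative placeholders, not a standard schema (model cards are one established format you could follow instead). `results` is left empty because it should hold your own measured outcomes.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class FineTuneRecord:
    """Minimal, hypothetical record of one customization run."""
    project: str
    purpose: str
    base_model: str
    data_sources: list
    preprocessing: list
    augmentation: list = field(default_factory=list)
    hyperparameters: dict = field(default_factory=dict)
    results: dict = field(default_factory=dict)  # fill in with metrics you actually measured
    decisions: list = field(default_factory=list)

record = FineTuneRecord(
    project="support-ticket-classifier",
    purpose="Route customer tickets to the right team",
    base_model="<pre-trained model name>",
    data_sources=["exported help-desk tickets"],
    preprocessing=["whitespace normalization", "deduplication"],
    hyperparameters={"learning_rate": 2e-5, "batch_size": 16, "epochs": 3},
    decisions=["froze lower layers to limit overfitting on a small dataset"],
)
print(json.dumps(asdict(record), indent=2))
```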


In the dynamic landscape of natural language processing, customizing LLMs for specific tasks stands out as a powerful driver of innovation and problem-solving. Having explored the key steps in adapting pre-trained models to unique applications, we can see clearly why this approach matters.

Customization through fine-tuning allows practitioners to strike a balance between the broad understanding embedded in pre-trained models and the intricacies of task-specific domains. This adaptability lets industries and researchers apply these models across many fields, from healthcare and financial services to customer support.

At Mindbowser, we help you explore the opportunities that LLM customization offers for advancing natural language understanding and problem-solving. Whether you are working on sentiment analysis, entity recognition, or another specialized task, we can help you unlock the full potential of language models.
